Theme: Clinical Assessment and the OSCE

Examining the function of Mini-CEX as an assessment and learning tool: factors associated with the quality of written feedback within the Mini-CEX

The Mini Clinical Evaluation Exercise (Mini-CEX; Norcini et al., 1995) has been adopted as a means of providing feedback in undergraduate medical education (e.g., Fernando et al., 2008; Hill & Kendall, 2007), despite limited evidence supporting its use in prevocational training. After directly observing a student examining or managing a patient, the Mini-CEX assessor completes an assessment form, rating several domains of clinical performance and providing written feedback. This study aimed to examine factors associated with the quality of the written feedback provided during Mini-CEX sessions.

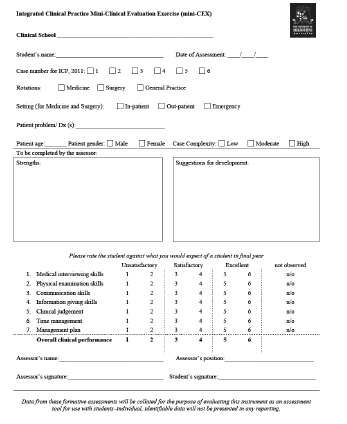

A Mini-CEX assessment form records the student's clinical rotation, the Mini-CEX setting, the assessor's clinical position, the complexity of the clinical case, and the patient's gender and age. Seven domains of clinical performance are assessed: 1) medical interviewing, 2) physical examination, 3) communication, 4) information giving, 5) clinical judgement, 6) time management and 7) management plan. In addition, students are rated on their overall clinical performance and receive written feedback on their strengths and weaknesses.
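The form described above can be modelled as a simple record. The sketch below is a hypothetical data structure, not the school's actual form: the `MiniCEXForm` class, its field names, and the 1 to 9 rating scale (borrowed from the original Mini-CEX) are all assumptions.

```python
from dataclasses import dataclass
from typing import Dict

# The seven domains of clinical performance listed on the form.
DOMAINS = (
    "medical_interviewing",
    "physical_examination",
    "communication",
    "information_giving",
    "clinical_judgement",
    "time_management",
    "management_plan",
)

# Assumption: integer ratings on a 1-9 scale, as in the original Mini-CEX;
# the scale used on this school's form is not stated.
SCALE_MIN, SCALE_MAX = 1, 9

ASSESSOR_POSITIONS = {"consultant", "GP", "registrar/fellow"}
COMPLEXITY_LEVELS = {"low", "moderate", "high"}


@dataclass
class MiniCEXForm:
    """Hypothetical record of one Mini-CEX session."""
    clinical_rotation: str
    setting: str
    assessor_position: str
    case_complexity: str
    patient_gender: str
    patient_age: int
    domain_ratings: Dict[str, int]  # assumption: unobserved domains are omitted
    overall_rating: int
    strengths_feedback: str = ""    # empty string means no feedback was written
    development_feedback: str = ""

    def __post_init__(self) -> None:
        # Reject categories other than those described in the text.
        if self.assessor_position not in ASSESSOR_POSITIONS:
            raise ValueError(f"unknown assessor position: {self.assessor_position}")
        if self.case_complexity not in COMPLEXITY_LEVELS:
            raise ValueError(f"unknown complexity: {self.case_complexity}")
        unknown = set(self.domain_ratings) - set(DOMAINS)
        if unknown:
            raise ValueError(f"unknown domains: {sorted(unknown)}")
        # Every rating, including the overall one, must lie on the assumed scale.
        for rating in [*self.domain_ratings.values(), self.overall_rating]:
            if not SCALE_MIN <= rating <= SCALE_MAX:
                raise ValueError(f"rating {rating} outside {SCALE_MIN}-{SCALE_MAX}")
```

Validating at construction time keeps malformed forms out of the later analyses, where a mistyped complexity level would otherwise silently create an extra row in a contingency table.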

The Mini-CEX assessment forms from 1427 Mini-CEX sessions at a large Australian medical school were analysed. The written feedback, on both students’ strengths and suggestions for development, was categorized and related to the ratings of clinical performance, clinical case complexity and assessors’ clinical position.

A predetermined coding manual was used to categorize the written feedback. Feedback on students' strengths was categorized as general or specific, and feedback for development as general or specific suggestions. Because very few comments went beyond suggestions, that category was merged into the "specific suggestions" category. A "no feedback" category was added to both the strengths and the development categorizations.
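The bookkeeping behind this categorization can be sketched as a mapping from a coder's raw code to the final categories. This is a hypothetical illustration only: the coding itself was done by people using the manual, and the raw code labels, the `Code` enum and the `collapse`/`tally` helpers are assumptions. The sketch shows the two rules stated above: blank comments become "no feedback", and the rare beyond-suggestion code is folded into specific suggestions.

```python
from collections import Counter
from enum import Enum
from typing import Dict, Iterable, Optional, Tuple


class Code(Enum):
    NONE = "no feedback"
    GENERAL = "general"
    SPECIFIC = "specific"


# Hypothetical raw codes a human coder might assign; labels are illustrative.
STRENGTH_CODES: Dict[str, Code] = {
    "general": Code.GENERAL,
    "specific": Code.SPECIFIC,
}
DEVELOPMENT_CODES: Dict[str, Code] = {
    "general_suggestion": Code.GENERAL,
    "specific_suggestion": Code.SPECIFIC,
    # Rare comments that went beyond a suggestion are merged into SPECIFIC.
    "beyond_suggestion": Code.SPECIFIC,
}


def collapse(comment: str, raw_code: Optional[str], scheme: Dict[str, Code]) -> Code:
    """Map a coder's raw code to a final category; blank comments become NONE."""
    if not comment.strip():
        return Code.NONE
    if raw_code not in scheme:
        raise ValueError(f"uncoded or unknown code: {raw_code!r}")
    return scheme[raw_code]


def tally(coded: Iterable[Tuple[str, Optional[str]]], scheme: Dict[str, Code]) -> Counter:
    """Count final categories across (comment, raw_code) pairs."""
    return Counter(collapse(comment, code, scheme) for comment, code in coded)
```

Tallies produced separately for each assessor position or complexity level give the cell counts of the contingency tables that a chi-square analysis takes as input.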

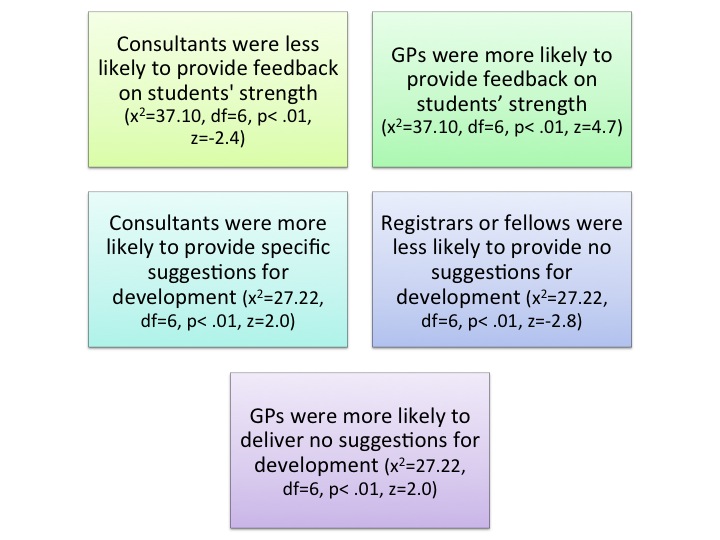

Assessors' clinical position was classified into three categories: consultant, general practitioner (GP), and registrar or fellow. A chi-square analysis identified a relationship between the type of feedback and the assessor's clinical position.

Clinical case complexity was classified as low, moderate or high. A chi-square analysis showed that the higher the case complexity, the more likely assessors were to provide feedback on students' strengths (χ² = 17.48, df = 4, p < .01, z = -3.2).
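The chi-square test of independence used here can be sketched in plain Python. With three complexity levels and three strengths-feedback categories (general, specific, no feedback), the 3 × 3 table has df = (3 - 1)(3 - 1) = 4, matching the reported value. The sketch also computes adjusted standardized residuals, one common way of obtaining a cell-level z; the text does not say how its z was derived or for which cell, so that link is an assumption. The function names are hypothetical, and in practice a statistics library (e.g. SciPy's `chi2_contingency`) would normally be used.

```python
import math
from typing import List, Sequence, Tuple


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability of a chi-square variable with df >= 1."""
    if x <= 0:
        return 1.0
    y = x / 2.0
    # Start from Q(1, y) = exp(-y) (even df) or Q(1/2, y) = erfc(sqrt(y)) (odd df).
    if df % 2 == 0:
        q, a = math.exp(-y), 1.0
    else:
        q, a = math.erfc(math.sqrt(y)), 0.5
    # Step up with Q(a + 1, y) = Q(a, y) + y**a * exp(-y) / Gamma(a + 1).
    while a < df / 2.0:
        q += math.exp(a * math.log(y) - y - math.lgamma(a + 1.0))
        a += 1.0
    return q


def chi_square_independence(
    table: Sequence[Sequence[int]],
) -> Tuple[float, int, float, List[List[float]]]:
    """Return (chi2, df, p, adjusted standardized residuals) for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    if len(row_totals) < 2 or len(col_totals) < 2 or 0 in row_totals or 0 in col_totals:
        raise ValueError("need at least 2x2 with no empty rows or columns")
    chi2 = 0.0
    residuals: List[List[float]] = []
    for i, row in enumerate(table):
        res_row = []
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
            # Adjusted residual: (O - E) / sqrt(E * (1 - row share) * (1 - col share)).
            se = math.sqrt(expected * (1 - row_totals[i] / n) * (1 - col_totals[j] / n))
            res_row.append((observed - expected) / se)
        residuals.append(res_row)
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return chi2, df, chi2_sf(chi2, df), residuals
```

As a check against the reported statistic, `chi2_sf(17.48, 4)` evaluates to about 0.0016, consistent with p < .01. The same function applies unchanged to the assessor-position table.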

Intercorrelations among the scores for the seven domains of clinical performance were analysed using Spearman correlations, and moderate to strong, significant positive relationships were found (r = .59 to .79). The relationships between the ratings of students' clinical performance and the feedback provided were also investigated. A weak, significant negative relationship was found between clinical performance ratings and feedback for students' development: higher ratings were less likely to be accompanied by specific feedback for development (r = -.22 to -.28).
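Spearman's correlation is Pearson's correlation computed on ranks; because Mini-CEX ratings lie on a short ordinal scale with many ties, tied values receive their average rank. A minimal pure-Python sketch follows, with hypothetical function names. Correlating ratings with feedback would additionally require an ordinal coding of the feedback categories (for example, hypothetically, 0 = no feedback, 1 = general, 2 = specific); the coding actually used is not stated, so that choice is an assumption.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho as the Pearson correlation of average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0 or sy == 0:
        raise ValueError("correlation undefined for a constant sequence")
    return cov / (sx * sy)
```

Calling `spearman` on every pair of the seven domain-score columns yields the intercorrelation matrix; calling it on overall ratings and the ordinal feedback codes gives the rating-feedback relationship. Significance testing is not included in this sketch.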

- The lack of variation between domain scores may indicate that the Mini-CEX is not making fine distinctions between specific areas of clinical performance.

- To optimize the Mini-CEX as a learning tool, assessors need to provide both positive and negative feedback on every student performance.

- The quality of written feedback is influenced by the assessors’ clinical position and clinical case complexity.

- Structured assessor training, to standardize Mini-CEX assessors, and adequate exposure to clinical cases of varying complexity are required to improve the quality of written feedback.

Assessors' clinical position and clinical case complexity were shown to be two factors influencing the written feedback in the Mini-CEX.

Fernando, N., Cleland, J., McKenzie, H., & Cassar, K. (2008). Identifying the factors that determine feedback given to undergraduate medical students following formative mini-CEX assessments. Medical Education, 42, 89-95.

Hill, F., & Kendall, K. (2007). Adopting and adapting the mini-CEX as an undergraduate assessment and learning tool. The Clinical Teacher, 4, 244-248.

Norcini, J.J., Blank, L.L., Arnold, G.K., & Kimball, H.R. (1995). The Mini-CEX (Clinical Evaluation Exercise): A preliminary investigation. Annals of Internal Medicine, 123, 795-799.
